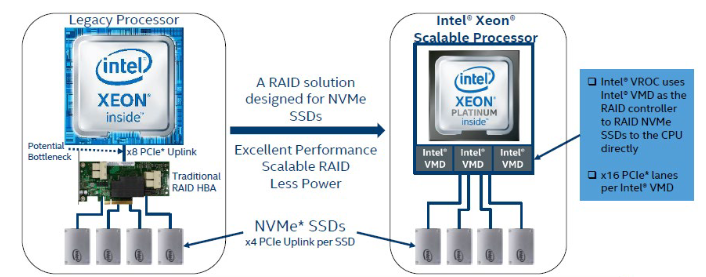

Data storage technology is constantly evolving, with each advance aiming to improve performance and efficiency in data center operations. One recent innovation is Intel's Virtual RAID on CPU (VROC), which has gained traction among IT professionals and enthusiasts. This article compares using VROC as a boot device with using a more traditional hardware RAID controller in a VMware vSAN Ready Node, highlighting the strengths and trade-offs of each approach.

VROC: The New Kid on the Block

Intel VROC is a hybrid software/hardware RAID solution: instead of offloading RAID processing to a dedicated controller card, it runs that processing on the host CPU, with NVMe drives attached directly to the CPU's PCIe lanes through Intel Volume Management Device (VMD). VROC supports NVMe SSDs (and, on supported platforms, SATA SSDs), and because NVMe drives do not sit behind an intermediate controller, it can deliver lower latency than a traditional RAID controller. Let's look at the main advantages of using VROC as a boot device in a vSAN Ready Node.
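To make "the CPU does the RAID processing" concrete, here is a minimal Python sketch of RAID 1 mirroring carried out by host code. It is a toy model under stated assumptions: `Disk`, `SoftwareMirror`, and `BLOCK_SIZE` are hypothetical names, the drives are in-memory dictionaries, and nothing here reflects how Intel actually implements VROC. It only shows the kind of work (duplicating writes, serving reads from any healthy copy) that moves from a controller onto the CPU.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4096  # bytes per block; an illustrative assumption


@dataclass
class Disk:
    """Hypothetical in-memory stand-in for one NVMe SSD."""
    name: str
    blocks: dict = field(default_factory=dict)
    failed: bool = False

    def write(self, lba: int, data: bytes) -> None:
        if self.failed:
            raise OSError(f"{self.name} has failed")
        self.blocks[lba] = data

    def read(self, lba: int) -> bytes:
        if self.failed:
            raise OSError(f"{self.name} has failed")
        # Unwritten blocks read back as zeros.
        return self.blocks.get(lba, bytes(BLOCK_SIZE))


class SoftwareMirror:
    """Hypothetical RAID 1 logic run by host code (the CPU), not a controller."""

    def __init__(self, *members: Disk) -> None:
        if len(members) < 2:
            raise ValueError("RAID 1 needs at least two members")
        self.members = list(members)
        self._next = 0  # round-robin index used to spread reads across members

    def write(self, lba: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        healthy = [d for d in self.members if not d.failed]
        if not healthy:
            raise OSError("no healthy members left")
        # The CPU duplicates every write to each healthy member.
        for disk in healthy:
            disk.write(lba, data)

    def read(self, lba: int) -> bytes:
        # Any healthy member holds a full copy, so reads rotate between them.
        for _ in range(len(self.members)):
            disk = self.members[self._next]
            self._next = (self._next + 1) % len(self.members)
            if not disk.failed:
                return disk.read(lba)
        raise OSError("no healthy members left")


# Usage: mirror two SSDs, lose one, and keep reading the boot data.
nvme0, nvme1 = Disk("nvme0"), Disk("nvme1")
boot_volume = SoftwareMirror(nvme0, nvme1)
boot_volume.write(0, b"\x01" * BLOCK_SIZE)
nvme0.failed = True  # simulate a failed SSD
assert boot_volume.read(0) == b"\x01" * BLOCK_SIZE
```

A real implementation also has to resynchronize a replaced member and persist array metadata across reboots; the sketch deliberately omits both.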

Advantages of VROC

Performance: Because NVMe SSDs connect directly to the CPU rather than sitting behind a RAID controller, VROC removes the controller as a potential bottleneck and can reduce latency. The result is faster reads and writes, which matters in virtualized environments.

Scalability: With VROC, you can expand storage capacity by adding NVMe SSDs without buying additional RAID controllers, within the limits of the CPU's available PCIe lanes. This lets your vSAN Ready Node grow as your storage needs increase.

Cost Savings: VROC can reduce the cost of a vSAN Ready Node by eliminating the need for a separate RAID controller. Because RAID processing runs on the CPU the server already has, VROC makes use of existing hardware resources, which lowers capital expenditure.
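The scalability point comes down to simple arithmetic: how much of each added drive a given RAID level actually exposes as usable space. The sketch below assumes identical drives, ignores metadata and formatting overhead, and uses hypothetical names (`RAID_RULES`, `usable_capacity`). RAID 0, 1, 5, and 10 are common levels, but which of them a particular VROC or RAID controller configuration enables depends on the platform.

```python
# Minimum member counts and usable-capacity formulas for common RAID levels,
# assuming identical drives and ignoring metadata overhead.
RAID_RULES = {
    "0": (2, lambda n, s: n * s),          # striping, no redundancy
    "1": (2, lambda n, s: s),              # mirroring: each member holds a full copy
    "5": (3, lambda n, s: (n - 1) * s),    # one drive's worth of parity
    "10": (4, lambda n, s: (n // 2) * s),  # striped mirrors
}


def usable_capacity(level: str, drives: int, drive_size_gb: float) -> float:
    """Return usable capacity in GB for a RAID set of identical drives."""
    if level not in RAID_RULES:
        raise ValueError(f"unsupported RAID level: {level}")
    min_drives, formula = RAID_RULES[level]
    if drives < min_drives:
        raise ValueError(f"RAID {level} needs at least {min_drives} drives")
    if level == "10" and drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return formula(drives, drive_size_gb)


# Hypothetical example: two 480 GB NVMe SSDs mirrored for boot,
# compared with a four-drive RAID 10 set of the same SSDs.
for level, n in [("1", 2), ("10", 4)]:
    print(f"RAID {level} with {n} x 480 GB: {usable_capacity(level, n, 480)} GB usable")
```

For a boot device, the usual choice is a two-drive mirror, where adding capacity means replacing both drives rather than adding a third.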

Traditional RAID Controller: Tried and Tested

A traditional RAID controller is a dedicated hardware component responsible for managing storage arrays and ensuring data redundancy. These controllers have been used in data centers for decades and have a long track record as a reliable, stable storage solution. Here are the main advantages of using a traditional RAID controller as the boot device in a vSAN Ready Node.

Advantages of Traditional RAID Controllers

Familiarity: Traditional RAID controllers are well-known and widely understood by IT administrators, making them a comfortable and familiar choice for managing storage in vSAN Ready Nodes.

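Hardware Independence: VROC works only on Intel platforms that support it, whereas a traditional RAID controller is a PCIe card that can be installed in servers from many vendors, including those built on non-Intel CPUs. This gives you more flexibility in hardware selection.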

Conclusion

Choosing between VROC and traditional RAID controllers for boot devices in vSAN Ready Nodes ultimately depends on your organization’s priorities and requirements. VROC offers better performance, scalability, and cost savings but comes with vendor lock-in and increased complexity. On the other hand, traditional RAID controllers provide familiarity and hardware independence but may fall short in terms of performance and cost-efficiency.

Evaluate the specific needs of your environment carefully before deciding which solution suits your vSAN Ready Node. Weighing factors such as performance, scalability, cost, and ease of management will let you make an informed decision that serves the node well over the long term. As storage technologies continue to evolve, keeping up with developments such as VROC will help your organization stay agile and ready to adapt to the changing data center landscape.
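One way to make that evaluation explicit is a simple weighted decision matrix over the four factors named above. In this sketch the weights and the 1 to 5 ratings are placeholders for illustration only, not measurements or recommendations; they loosely follow the qualitative summary in the conclusion, and `WEIGHTS`, `weighted_score`, and `options` are hypothetical names.

```python
from typing import Dict

# Hypothetical weights reflecting how much each factor matters to one environment.
WEIGHTS: Dict[str, float] = {
    "performance": 0.3,
    "scalability": 0.2,
    "cost": 0.3,
    "ease_of_management": 0.2,
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-factor ratings (e.g. 1-5) into one weighted average."""
    missing = weights.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[f] * w for f, w in weights.items()) / total_weight


# Placeholder ratings for illustration only; replace them with your own evaluation.
options = {
    "VROC": {"performance": 4, "scalability": 4, "cost": 4, "ease_of_management": 2},
    "RAID controller": {"performance": 3, "scalability": 3, "cost": 3, "ease_of_management": 4},
}

# Rank the options from highest to lowest weighted score.
ranked = sorted(options, key=lambda name: weighted_score(options[name], WEIGHTS), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(options[name], WEIGHTS):.2f}")
```

A weighted average keeps the trade-off visible: raising the weight on ease of management, for example, shows how quickly the ranking can flip toward a traditional controller.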