Many times over the past few months I have been asked about the benefits of using Intel Optane NVMe in a vSAN environment. Although Intel's marketing material boasted a good performance boost, I decided (purely out of curiosity) to do some performance benchmarking of my own, comparing Optane cache devices against SAS cache devices. The benchmark used exactly the same servers and networking to provide a level playing field; the only thing that changed was the cache devices used in the disk groups.

Server Specification:

- 6x Dell PowerEdge R730xd

- Intel Xeon CPU E5-2630 v3 @ 2.40GHz

- 128GB RAM

- 2x Dell PERC H730 Controllers

- 2x Intel dual-port 10Gb Ethernet adapters (configured with LACP)

Disk group config for the SAS test:

- 3x Disk Groups

- 3x 400GB SAS SSD per disk group (capacity tier)

- 1x 400GB SAS SSD per disk group (cache tier)

Disk group config for the Optane test:

- 2x Disk Groups

- 3x 400GB SAS SSD per disk group (capacity tier)

- 1x 750GB Optane NVMe P4800X per disk group (cache tier)

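Whilst you could say that the configurations are not identical, the vSAN write buffer is limited to 600GB per disk group, so both configurations provide the same total write buffer per host: 3 x 400GB = 1,200GB with SAS cache and 2 x 600GB = 1,200GB with Optane cache. If anything, the SAS configuration has more backend disks (9 versus 6 capacity SSDs per host), which should work in its favour.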
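The write-buffer comparison can be checked with a quick calculation. This Python sketch assumes the figures are per host and that all-flash vSAN uses the whole cache device as write buffer up to the 600GB per-disk-group cap; it uses only the device sizes listed above, and the function and dictionary names are illustrative rather than anything from vSAN tooling.

```python
# Per-disk-group write buffer cap for all-flash vSAN, as stated above (GB).
WRITE_BUFFER_CAP_GB = 600


def usable_write_buffer_gb(cache_device_gb: int, disk_groups: int,
                           cap_gb: int = WRITE_BUFFER_CAP_GB) -> int:
    """Total write buffer per host, assuming each disk group uses its
    cache device as write buffer up to the per-disk-group cap."""
    return disk_groups * min(cache_device_gb, cap_gb)


def capacity_devices(disk_groups: int, per_group: int) -> int:
    """Number of capacity-tier (backend) SSDs per host."""
    return disk_groups * per_group


# Illustrative encoding of the two disk group layouts described above.
configs = {
    "SAS cache": {"cache_gb": 400, "disk_groups": 3, "capacity_per_group": 3},
    "Optane cache": {"cache_gb": 750, "disk_groups": 2, "capacity_per_group": 3},
}

for name, cfg in configs.items():
    wb = usable_write_buffer_gb(cfg["cache_gb"], cfg["disk_groups"])
    backend = capacity_devices(cfg["disk_groups"], cfg["capacity_per_group"])
    print(f"{name}: {wb} GB write buffer, {backend} capacity SSDs per host")
```

Running it prints 1200 GB of write buffer for both configurations, with 9 versus 6 capacity SSDs per host. The `min()` is what makes the comparison fair: the extra 150GB on each 750GB Optane device sits above the cap and does not add write buffer.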

For the benchmark, we used HCIBench to automate Oracle Vdbench workload testing. Each test was configured as follows; the tests were designed to max out the system, hence the high number of VMDKs (250):

- 50 Virtual Machines

- 5 VMDKs per virtual machine

- 2 threads per VMDK

- 20% working set

- 4K, 8K, 16K, 32K, 64K and 128K block sizes

- 0%, 30%, 70% and 100% write workloads

- 900-second run time for each test
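The parameters above multiply out into a fairly large test matrix. This small Python sketch only restates the listed values; the variable names are illustrative and are not HCIBench or Vdbench settings. The runtime figure counts only the 900-second measurement windows for one cache configuration and ignores VM deployment, disk preparation and any warm-up that HCIBench may perform.

```python
from itertools import product

# Values taken from the test parameters listed above.
VMS = 50
VMDKS_PER_VM = 5
THREADS_PER_VMDK = 2
BLOCK_SIZES_KB = [4, 8, 16, 32, 64, 128]
WRITE_PCTS = [0, 30, 70, 100]
RUN_SECONDS = 900

total_vmdks = VMS * VMDKS_PER_VM                  # 250 VMDKs
total_threads = total_vmdks * THREADS_PER_VMDK    # 500 concurrent I/O threads
test_matrix = list(product(BLOCK_SIZES_KB, WRITE_PCTS))  # (block size, write %) pairs
total_runtime_h = len(test_matrix) * RUN_SECONDS / 3600  # measured run time only

for block_kb, write_pct in test_matrix:
    print(f"{block_kb}K blocks, {write_pct}% write / {100 - write_pct}% read, {RUN_SECONDS}s")
```

That is 24 combinations of block size and write ratio, or 6 hours of measured run time per cache configuration (12 hours across both), with 500 I/O threads spread over 250 VMDKs.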

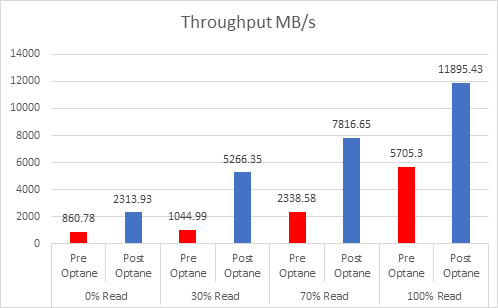

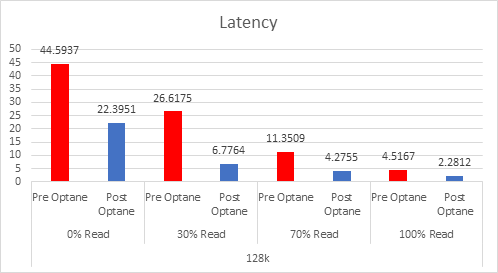

So what were the results?

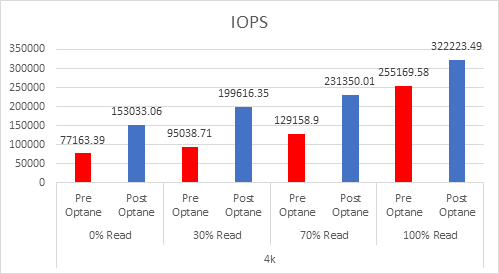

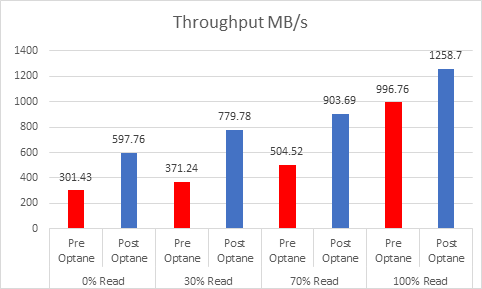

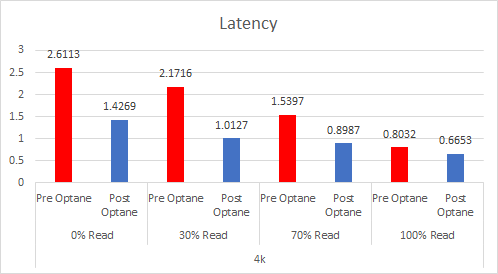

4K Blocksize:

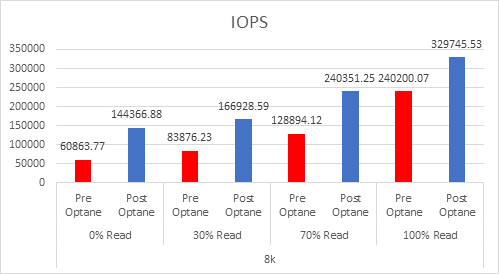

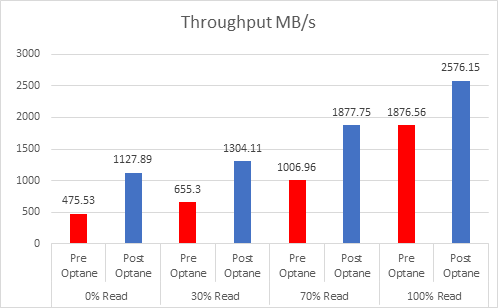

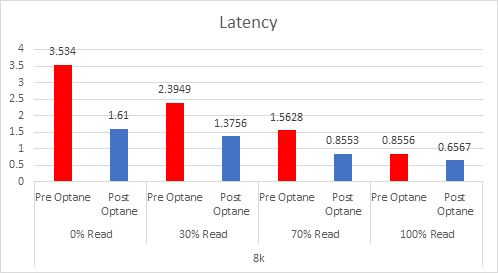

8K Blocksize:

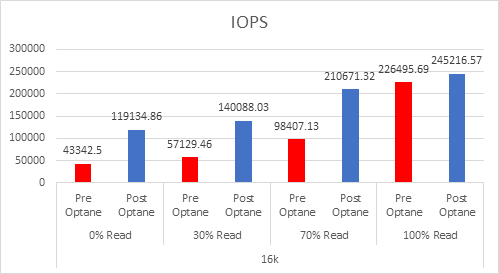

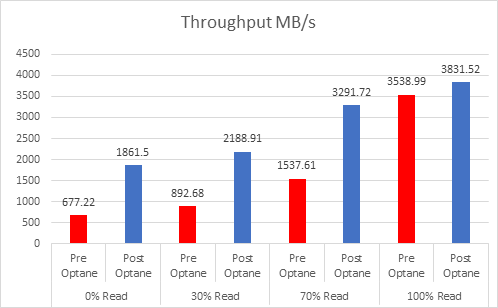

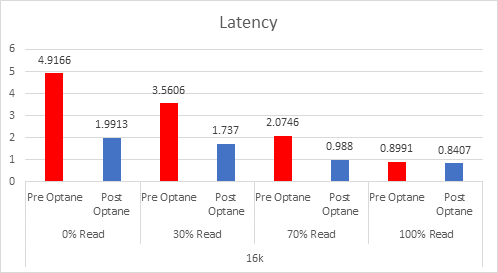

16K Blocksize:

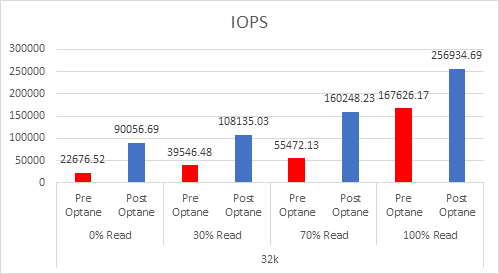

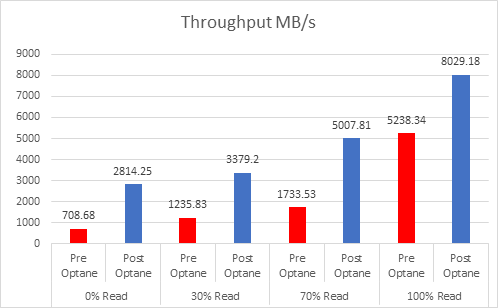

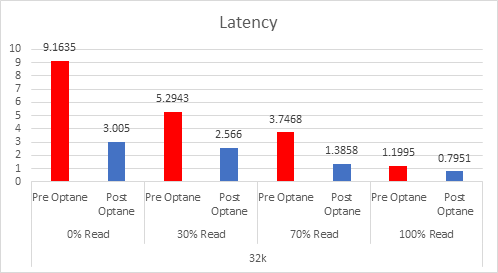

32K Blocksize:

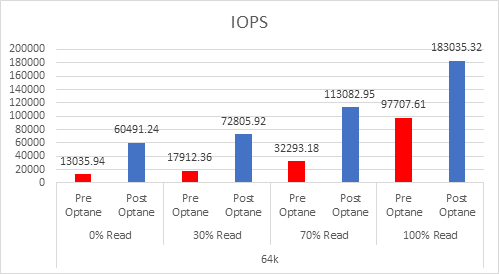

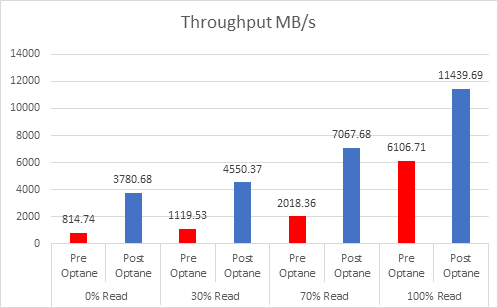

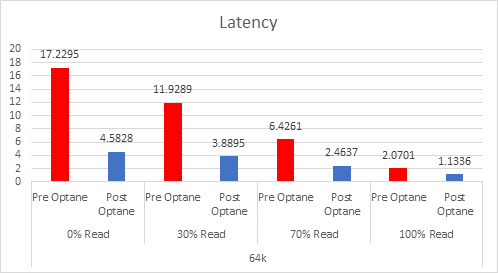

64K Blocksize:

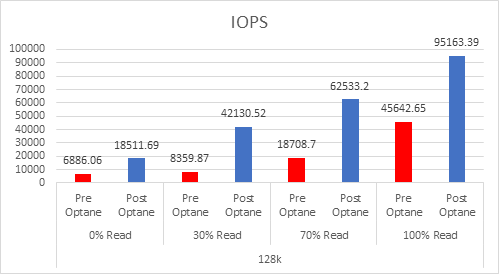

128K Blocksize:

As you can see, Optane really did boost performance, even though the server platform wasn't ideal for the Optane devices (Dell said those cards will not be certified on the 13G platform). Despite the workload being designed to max out the system, latency was in some cases reduced to almost a third, and throughput was in some cases increased by up to 3x.
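Latency and throughput moving by roughly the same factor is what you would expect from Little's law when the number of outstanding I/Os is fixed: IOPS = outstanding I/Os / latency. This Python sketch assumes each of the 500 Vdbench threads keeps exactly one I/O in flight (an unthrottled, closed-loop workload), which is an approximation rather than a description of how HCIBench drives Vdbench; the function names are hypothetical and the inputs in the example are illustrative, not measured values.

```python
# Concurrency from the test setup: 50 VMs x 5 VMDKs x 2 threads.
# Assumption: each thread keeps exactly one I/O in flight (closed loop, no think time).
OUTSTANDING_IOS = 50 * 5 * 2


def iops_from_latency(latency_ms: float, outstanding: int = OUTSTANDING_IOS) -> float:
    """Little's law (L = lambda * W) solved for throughput: IOPS = outstanding / latency."""
    return outstanding * 1000.0 / latency_ms


def throughput_mb_s(iops: float, block_kb: int) -> float:
    """Convert IOPS to MB/s for a given block size (1 MB = 1024 KB)."""
    return iops * block_kb / 1024.0


def implied_iops_gain(baseline_latency_ms: float, new_latency_ms: float) -> float:
    """IOPS ratio implied by a latency change when concurrency stays constant."""
    return iops_from_latency(new_latency_ms) / iops_from_latency(baseline_latency_ms)


if __name__ == "__main__":
    # Illustrative inputs only (not results from this test): latency falls to one third.
    gain = implied_iops_gain(baseline_latency_ms=3.0, new_latency_ms=1.0)
    print(f"Implied IOPS gain: {gain:.2f}x")
```

At a constant queue depth, cutting latency to a third implies roughly three times the IOPS, and because MB/s is just IOPS multiplied by block size, the same factor carries over to throughput at each block size. Real results will deviate from this where the workload is not fully closed-loop or where another bottleneck (network, capacity tier, destaging) takes over.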

Conclusion: Optane really does live up to expectations, and it isn't just marketing. I have yet to test a full NVMe system to see how far it can really be pushed, but I hope the numbers above go some way to convincing you to consider Optane as the cache tier in vSAN.
