Sizing a vSAN environment involves many considerations. With the launch of the new vSAN Sizing tool, I thought I would take the time to write up the questions I commonly ask people in order to understand what they want to run on vSAN and to scope the requirements that workload has to meet.

Capacity

Capacity is the baseline for any sizing activity: no matter what we achieve with the other requirements, we have to meet a usable capacity. Always size from usable capacity, because a RAW capacity figure does not take into account any Failure Tolerance Method, Erasure Coding or Dedupe/Compression. This is something we will cover a bit later in this article.

Capacity should also include the required Swap File space for each of the VMs that the environment is being scoped for.

IOPS

I have been involved in many discussions where the performance requirements are totally unknown. So many times I have been told “We want the fastest performance possible” without being told what the current IOPS requirement is. To put it into context, what is the point in buying a 200mph sports car when the requirement is to drive at 70mph max?

The IOPS requirement plays a key part in determining what level of vSAN Ready Node specification is required. For example, if the total requirement is 300,000 IOPS from a 10 Node cluster (30,000 IOPS per node), is there much point spending more money on an All-Flash configuration that delivers 150,000 IOPS per node? Simple answer…No! You could opt for a lower vSAN All-Flash Ready Node config that meets the requirements a lot more closely and still offers room for expansion in the future.
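The arithmetic behind that example can be sketched in a few lines of Python. The function names here are my own illustration and have nothing to do with the sizing tool itself; the figures are the ones from the example above.

```python
def required_iops_per_node(total_iops: float, node_count: int) -> float:
    """Spread the total IOPS requirement evenly across the cluster nodes."""
    if node_count <= 0:
        raise ValueError("node_count must be positive")
    return total_iops / node_count


def headroom_factor(node_capability_iops: float, total_iops: float, node_count: int) -> float:
    """How many times over a node spec covers the per-node requirement."""
    return node_capability_iops / required_iops_per_node(total_iops, node_count)


# Figures from the example: 300,000 IOPS across 10 nodes,
# compared with a node config rated at 150,000 IOPS.
per_node = required_iops_per_node(300_000, 10)
factor = headroom_factor(150_000, 300_000, 10)
print(f"Required per node: {per_node:,.0f} IOPS")
print(f"Node spec covers the requirement {factor:.1f}x over")
```

A headroom factor well above what you need for growth is a hint that a lower Ready Node profile would do the job.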

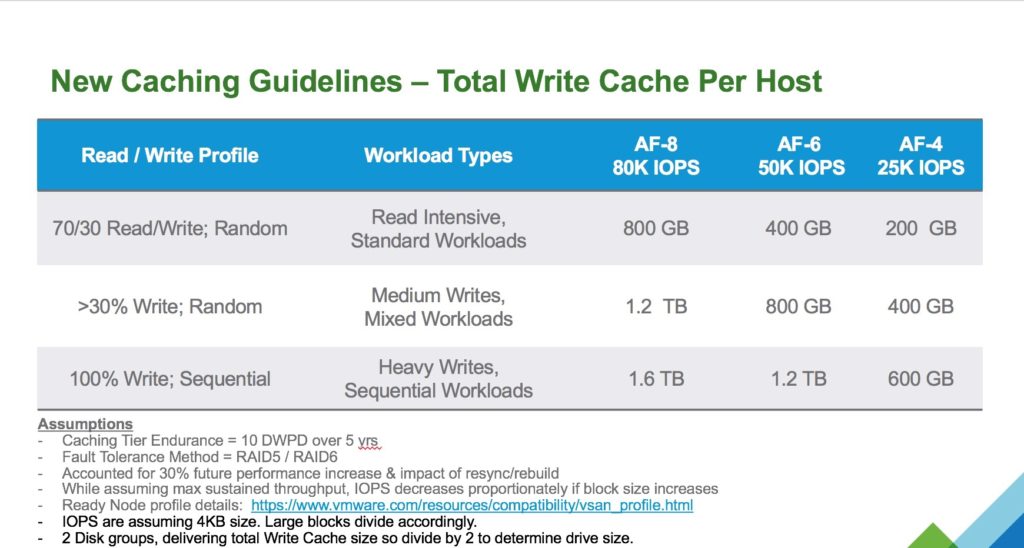

Workload Type

This is a pretty important requirement. For example, if your workload is write intensive, this changes the cache requirements, and it may also require a more write intensive flash technology such as NVMe. If you have different workload types going onto the same cluster, it is worthwhile categorizing those workloads into four categories:

- 70/30 Read/Write

- 80/20 Read/Write

- 90/10 Read/Write

- 50/50 Read/Write

Having the VMs in categories will allow you to specify the workload types in the sizing tool (in the advanced options).

vCPU to Physical Core count

This is something that gets overlooked, not from a requirement perspective, but because people are so used to sizing on a “VMs per host” basis, a model that no longer fits with increasing CPU core counts. Even the new sizing tool bases it on the vCPU to Physical Core ratio, which makes things a lot easier. Most customers I talk to who are performing a refresh of servers with either 12 or 14 core processors can lower the number of servers required by increasing the core count on the new servers, which allows them to run more vCPUs on a single host.
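To show why core count matters more than a VM-per-host figure, here is a minimal sketch of sizing hosts from a vCPU to Physical Core ratio. The 1,000 vCPU total, the 4:1 ratio and the dual-socket core counts are hypothetical numbers chosen for illustration, and the result does not include any spare host for maintenance or failures.

```python
import math


def hosts_required(total_vcpus: int, cores_per_host: int, vcpu_per_core: float) -> int:
    """Hosts needed to run total_vcpus at a given vCPU:physical-core ratio."""
    if cores_per_host <= 0 or vcpu_per_core <= 0:
        raise ValueError("cores_per_host and vcpu_per_core must be positive")
    vcpus_per_host = cores_per_host * vcpu_per_core
    return math.ceil(total_vcpus / vcpus_per_host)


# Hypothetical refresh: 1,000 vCPUs at a 4:1 ratio.
old_hosts = hosts_required(1000, cores_per_host=2 * 12, vcpu_per_core=4.0)  # 2 x 12-core CPUs
new_hosts = hosts_required(1000, cores_per_host=2 * 20, vcpu_per_core=4.0)  # 2 x 20-core CPUs
print(f"12-core servers: {old_hosts} hosts, 20-core servers: {new_hosts} hosts")
```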

List of questions for requirements for each workload type

- Average VMDK Size per VM

- Average number of VMDKs per VM

- Average number of vCPU per VM

- Average vRAM per VM

- Average IOPS Requirement per VM

- Number of VMs

- vCPU to Physical Core Ratio
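The answers to these questions roll up into the totals you need for sizing. The sketch below is one way to capture them per workload type; the class, its field names and the sample values are all hypothetical. It assumes each VM's Swap File is the size of its vRAM (in other words, no memory reservation), which is the worst case for capacity.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Answers to the sizing questions for one workload type (hypothetical structure)."""
    name: str
    vm_count: int
    avg_vmdk_size_gb: float
    avg_vmdks_per_vm: float
    avg_vcpus_per_vm: float
    avg_vram_gb: float
    avg_iops_per_vm: float

    def usable_capacity_gb(self) -> float:
        # VMDK space plus swap; swap assumed equal to vRAM (no reservation).
        per_vm = self.avg_vmdk_size_gb * self.avg_vmdks_per_vm + self.avg_vram_gb
        return self.vm_count * per_vm

    def total_vcpus(self) -> float:
        return self.vm_count * self.avg_vcpus_per_vm

    def total_iops(self) -> float:
        return self.vm_count * self.avg_iops_per_vm


# Made-up example: 100 VMs, 2 x 50GB VMDKs, 2 vCPU, 8GB vRAM, 50 IOPS each.
web = WorkloadProfile("web", 100, 50, 2, 2, 8, 50)
print(web.usable_capacity_gb(), web.total_vcpus(), web.total_iops())
```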

RAW Capacity versus Usable Capacity, how much do I actually need?

The new sizing tool takes all your requirements into account, including RAID levels and Dedupe/Compression ratios, and returns a RAW Capacity requirement based on the data you enter. If you are like me and prefer to do it quick and dirty, below is a table showing how to work it out for a requirement of 100TB usable (including Swap File space). For a standard cluster with no stretched capabilities it looks like this:

| FTT Level | FTT Method | Min number of hosts | Multiplication Factor | RAW Capacity Based on 100TB Usable |

|---|---|---|---|---|

| FTT=0 | None | N/A | 1x | 100TB |

| FTT=1 | Mirror | 3 | 2x | 200TB |

| FTT=2 | Mirror | 5 | 3x | 300TB |

| FTT=3 | Mirror | 7 | 4x | 400TB |

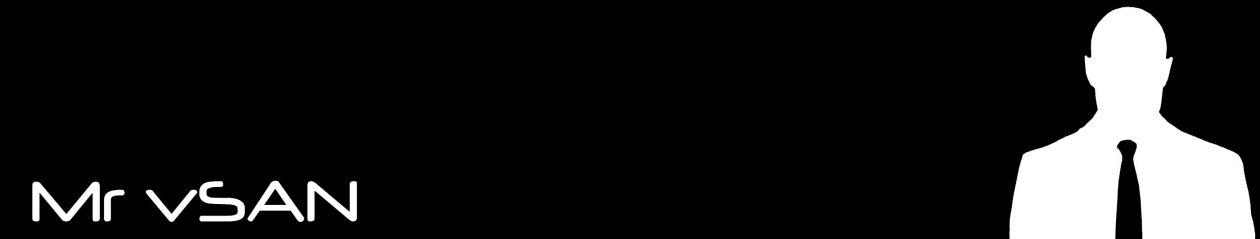

| FTT=1 | RAID5 | 4 | 1.33x | 133TB |

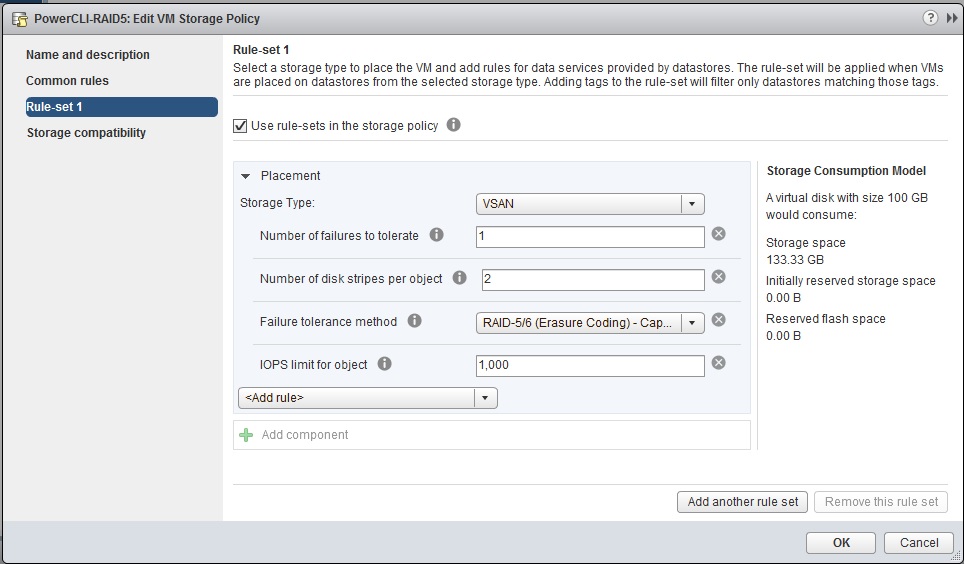

| FTT=2 | RAID6 | 6 | 1.5x | 150TB |
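If you would rather script the quick and dirty method, the table reduces to a lookup of multiplication factors. This is only a sketch: the dictionary and function names are made up for illustration, and it ignores Dedupe/Compression and any slack space. RAID5 is expressed as 4/3, which the table rounds to 1.33x.

```python
# (FTT level, FTT method) -> multiplication factor, as in the table above.
RAW_FACTORS = {
    (0, "None"): 1.0,
    (1, "Mirror"): 2.0,
    (2, "Mirror"): 3.0,
    (3, "Mirror"): 4.0,
    (1, "RAID5"): 4 / 3,  # rounded to 1.33x in the table
    (2, "RAID6"): 1.5,
}


def raw_capacity_tb(usable_tb: float, ftt: int, method: str) -> float:
    """RAW capacity needed to deliver usable_tb under a given FTT level and method."""
    try:
        factor = RAW_FACTORS[(ftt, method)]
    except KeyError:
        raise ValueError(f"Unsupported combination: FTT={ftt}, method={method}") from None
    return usable_tb * factor


for (ftt, method) in RAW_FACTORS:
    print(f"FTT={ftt} {method}: {raw_capacity_tb(100, ftt, method):.0f}TB RAW")
```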

In vSAN 6.6 VMware introduced localized protection (Secondary FTT) and the ability to include or exclude specific objects from stretched cluster protection (Primary FTT). Below is a table showing the RAW Capacity requirements based on the two FTT levels:

| Primary FTT Level | Secondary FTT Level | Secondary FTT Method | Min Number of hosts per site | RAW Capacity Based on 100TB Usable |
|---|---|---|---|---|
| PFTT=1 | SFTT=0 | RAID0 | 1 | 200TB |
| PFTT=1 | SFTT=1 | RAID1 | 3 | 400TB |
| PFTT=1 | SFTT=1 | RAID5 | 4 | 266TB |
| PFTT=1 | SFTT=2 | RAID6 | 6 | 300TB |
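The stretched cluster figures follow the same pattern: with PFTT=1 the data exists on both sites, so the local (Secondary FTT) factor is simply doubled. The sketch below reproduces the table under that assumption; the names are hypothetical and it only covers the PFTT=1 rows shown above.

```python
# (Secondary FTT level, method) -> per-site multiplication factor.
SECONDARY_FACTORS = {
    (0, "RAID0"): 1.0,
    (1, "RAID1"): 2.0,
    (1, "RAID5"): 4 / 3,
    (2, "RAID6"): 1.5,
}

SITE_COPIES_PFTT1 = 2  # PFTT=1 keeps a full copy of the data on each of the two sites


def stretched_raw_capacity_tb(usable_tb: float, sftt: int, method: str) -> float:
    """RAW capacity across both sites for PFTT=1 and the given Secondary FTT policy."""
    if (sftt, method) not in SECONDARY_FACTORS:
        raise ValueError(f"Unsupported combination: SFTT={sftt}, method={method}")
    return usable_tb * SITE_COPIES_PFTT1 * SECONDARY_FACTORS[(sftt, method)]


for (sftt, method) in SECONDARY_FACTORS:
    total = stretched_raw_capacity_tb(100, sftt, method)
    print(f"PFTT=1 SFTT={sftt} {method}: {total:.1f}TB RAW in total")
```

The RAID5 row works out to roughly 266.7TB, which the table shows as 266TB.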

Mixed FTT levels and FTT Methods

Because vSAN is truly a Software Defined Storage Platform, you can have a mixture of VMs/Objects with varying levels of protection and FTT Methods. For example, you may choose RAID 5 in the storage policy for read intensive workloads and a RAID 1 policy for more write intensive workloads. They can all co-exist on the same vSAN Cluster/Datastore perfectly well, and the new sizing tool allows you to specify different Protection Levels and Methods for each workload type.
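For a mixed cluster, the quick and dirty approach is to apply the multiplication factor per workload and add up the results. Here is a minimal sketch, assuming a hypothetical 60TB of read intensive data on RAID 5 and 40TB of write intensive data on RAID 1; the names and the split are made up for illustration.

```python
# Storage policy -> multiplication factor (standard, non-stretched cluster).
POLICY_FACTORS = {
    "RAID1_FTT1": 2.0,
    "RAID1_FTT2": 3.0,
    "RAID5_FTT1": 4 / 3,
    "RAID6_FTT2": 1.5,
}


def mixed_raw_capacity_tb(workloads: list[tuple[str, float, str]]) -> float:
    """Sum RAW capacity over (name, usable_tb, policy) workload entries."""
    total = 0.0
    for name, usable_tb, policy in workloads:
        if policy not in POLICY_FACTORS:
            raise ValueError(f"Unknown policy {policy!r} for workload {name!r}")
        total += usable_tb * POLICY_FACTORS[policy]
    return total


# Hypothetical split of 100TB usable across two policies.
workloads = [
    ("read-intensive", 60.0, "RAID5_FTT1"),
    ("write-intensive", 40.0, "RAID1_FTT1"),
]
total_raw = mixed_raw_capacity_tb(workloads)
print(f"Total RAW required: about {total_raw:.0f}TB")
```

In this made-up case the mix needs about 160TB RAW, compared with 200TB if everything were mirrored.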