I often speak with my customers and colleagues about this very topic, so I thought it was about time I wrote a post on how to power down a Virtual SAN cluster the correct way. After all, there may be countless reasons why you need to do this, from scheduled power outages to essential building maintenance. There are a number of factors to take into account, and a major one is whether your vCenter Server is running on the Virtual SAN cluster you wish to power down. To write this post I performed the steps on an 8-node all-flash cluster where the vCenter Appliance also runs within the Virtual SAN environment.

In order to shut down the cluster you will need:

- Access to the vCenter Web Client

- Access to the vCenter Server itself (RDP for the Windows version, or the management page on port 5480 for the appliance)

- SSH access to the hosts, or access to the VMware Host Client

Step 1 – Powering off the Virtual Machines

Powering off the virtual machines can be done in multiple ways. The easiest is through the WebUI, especially if you have a large number of virtual machines. Other ways to power off virtual machines include:

- PowerCLI

- PowerShell

- vim-cmd directly on the ESXi hosts
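If you would rather script the vim-cmd route, here is a minimal sketch for the ESXi shell. It is not what I ran for this post, just an illustration. The function names and the `DRY_RUN` switch are my own invention, and it assumes that `vim-cmd vmsvc/getallvms` prints one VM per line with the numeric ID in the first column. With `DRY_RUN=1` (the default) it only prints the shutdown commands it would run.

```shell
#!/bin/sh
# Sketch: gracefully shut down the powered-on VMs registered on this ESXi host.
# DRY_RUN and all function names are illustrative, not part of any VMware tool.
DRY_RUN="${DRY_RUN:-1}"

# Read `vim-cmd vmsvc/getallvms` output on stdin and print the VM IDs.
# The header line and any spilled annotation text are skipped by keeping
# only lines whose first field is purely numeric.
vm_ids() {
    awk '$1 ~ /^[0-9]+$/ { print $1 }'
}

# Shut down one VM by ID, or just print the command when DRY_RUN=1.
shutdown_vm() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "vim-cmd vmsvc/power.shutdown $1"
    else
        vim-cmd vmsvc/power.shutdown "$1" < /dev/null
    fi
}

# Walk every registered VM and shut down the ones that are powered on.
shutdown_all_vms() {
    vim-cmd vmsvc/getallvms | vm_ids | while read -r id; do
        if vim-cmd vmsvc/power.getstate "$id" < /dev/null | grep -q "Powered on"; then
            shutdown_vm "$id"
        fi
    done
}
```

Calling `shutdown_all_vms` on a host lists (or runs) one shutdown per powered-on VM. Two caveats: `power.shutdown` asks the guest to shut down and so relies on VMware Tools, and the loop does not exclude the vCenter Server VM, so review the dry-run output before setting `DRY_RUN=0`.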

Step 2 – Powering down the Virtual Center Server

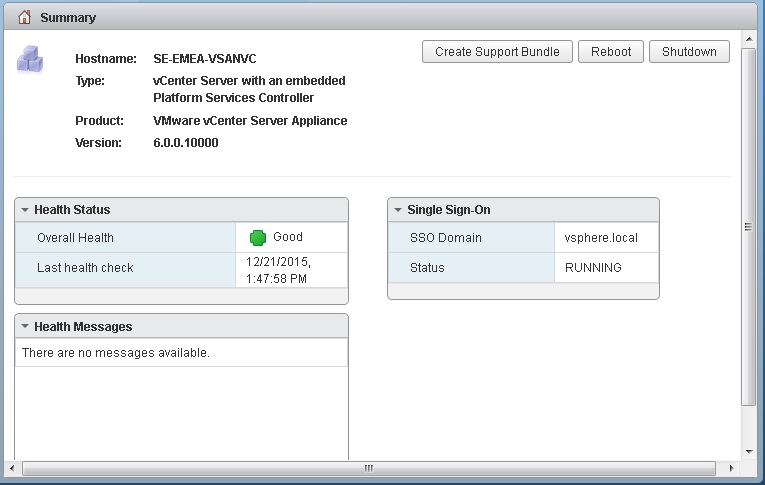

If your vCenter Server is running on your Virtual SAN datastore, as it is in my cluster, you will have to power it down after powering down the other virtual machines. If you are using the Windows vCenter Server, you can simply RDP to the server and shut down Windows itself. If, like me, you are using the vCenter Appliance, point a web browser at the vCenter IP address or fully qualified domain name on port 5480 and log in as the root user. From there you will have the option to shut down the vCenter Appliance.

Step 3 – Place the hosts into maintenance mode

Because our vCenter Server is powered down, we obviously won’t be able to do this through the vCenter Web Client. The easiest alternative is to SSH into each host, one at a time, and run the command:

# esxcli system maintenanceMode set -e true -m noAction

The above command places the host into maintenance mode with the “Do Nothing” (noAction) option, so Virtual SAN neither migrates data off the host nor ensures that the data stays accessible in the cluster. That is fine here because your virtual machines are already powered off.
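With eight hosts, logging in to each one by hand gets tedious, so here is a rough sketch of looping over them from a management workstation. The host names, the `HOSTS` and `DRY_RUN` variables and the function names are all placeholders of mine, and it assumes root SSH access is enabled on every host. Note that `-e` is given an explicit `true`, since esxcli expects a boolean value there.

```shell
#!/bin/sh
# Sketch: put every host into maintenance mode with the noAction vSAN option.
# HOSTS is a hypothetical list; replace it with your own host names.
HOSTS="${HOSTS:-esxi01.example.local esxi02.example.local}"
DRY_RUN="${DRY_RUN:-1}"
ENTER_MM="esxcli system maintenanceMode set -e true -m noAction"

# Run a command on one host over SSH, or only print it when DRY_RUN=1.
run_on_host() {
    target="$1"
    shift
    if [ "$DRY_RUN" = "1" ]; then
        echo "ssh root@$target $*"
    else
        ssh "root@$target" "$@"
    fi
}

# Visit the hosts one at a time and report any host where the command failed.
enter_maintenance_all() {
    for h in $HOSTS; do
        run_on_host "$h" "$ENTER_MM" || echo "maintenance mode failed on $h" >&2
    done
}
```

Running `enter_maintenance_all` with the default `DRY_RUN=1` just prints one `ssh` line per host, which is a handy way to check the host list before doing it for real with `DRY_RUN=0`.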

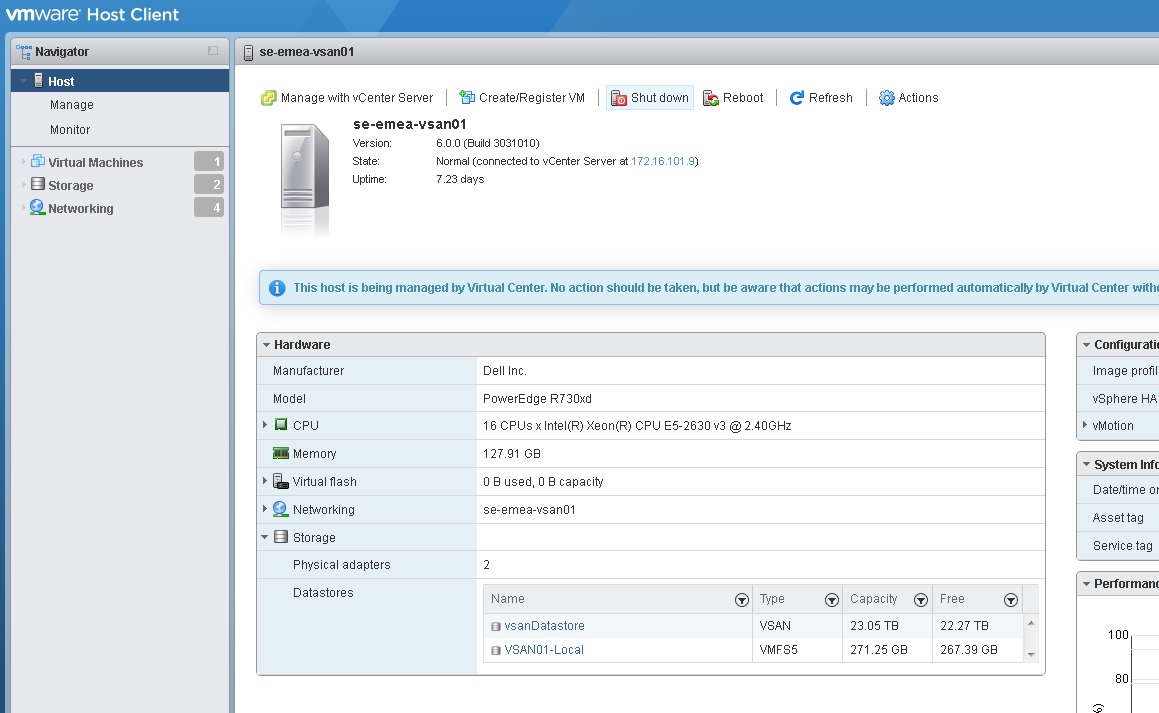

Another option, and one I would highly recommend, is the VMware Host Client. This is basically a VIB you install on each of your hosts. You then point your web browser to the host’s IP address or FQDN with “/ui” at the end, which brings you to a host-based web client that is fantastic for managing an ESXi host outside of vCenter. From within this client you can place the host into maintenance mode from the Actions tab, and also shut the host down from the same tab.

One thing I noticed when placing a host into maintenance mode here is that it did not present any options for what to do with the data. The default behaviour is “Ensure Accessibility”, and because of the nature of this setting you will not be able to put all of your hosts into maintenance mode this way. For the last remaining hosts there are two choices:

- Log into the hosts via SSH and perform the command mentioned earlier

- Shut the hosts down without entering maintenance mode

Since there are no running virtual machines, and because of the way Virtual SAN stores the disk objects, there is no risk to data with either option. After all, some power outages are unscheduled and Virtual SAN recovers from those seamlessly.

Step 4 – Power Down the ESXi hosts

Once all the hosts have been placed into maintenance mode, we need to power down any hosts that are still powered on from the previous step. This can be done without vCenter in a number of ways:

- Direct console access, or a remote management controller such as iLO/iDRAC/BMC

- SSH access, by running the command “dcui” and following the function keys to shut down the system

- From the ESXi command line by running the following command:

# esxcli system shutdown poweroff -r Scheduled

- From the VMware Host Client as directed in the previous step
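For the command-line route, a small guard can save a failed attempt. As far as I know, `esxcli system shutdown poweroff` refuses to run on a host that is not in maintenance mode, so the sketch below checks the state first. The function name and the `DRY_RUN` and `REASON` variables are my own, and it assumes `esxcli system maintenanceMode get` prints `Enabled` or `Disabled`.

```shell
#!/bin/sh
# Sketch: power off this host only if it is already in maintenance mode.
# DRY_RUN, REASON and the function name are illustrative choices.
DRY_RUN="${DRY_RUN:-1}"
REASON="${REASON:-Scheduled}"

# $1 is the output of `esxcli system maintenanceMode get`.
poweroff_if_in_maintenance() {
    if [ "$1" != "Enabled" ]; then
        echo "host is not in maintenance mode, skipping poweroff" >&2
        return 1
    fi
    if [ "$DRY_RUN" = "1" ]; then
        echo "esxcli system shutdown poweroff -r $REASON"
    else
        esxcli system shutdown poweroff -r "$REASON"
    fi
}
```

On a host you would call it as `poweroff_if_in_maintenance "$(esxcli system maintenanceMode get)"`. For a host you chose not to put into maintenance mode, the DCUI or the VMware Host Client is probably the simpler way to shut it down.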

That’s it, your cluster is now correctly shut down. After your scheduled outage is over, bringing your cluster back up is straightforward:

- Power up the hosts

- Use SSH or the VMware Host Client to exit maintenance mode

- Power up the vCenter server

- Power up the other virtual machines
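Because vCenter is still down at this point, the first steps have to be done on the hosts themselves. Here is a hedged sketch of how that might look from the ESXi shell. The VM name `vcsa01`, the variables and the function names are placeholders of mine, and the name lookup assumes the VM name contains no spaces.

```shell
#!/bin/sh
# Sketch: leave maintenance mode, then power on the vCenter Server VM.
# VCENTER_NAME is a hypothetical VM name; DRY_RUN=1 only prints the
# state-changing commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"
VCENTER_NAME="${VCENTER_NAME:-vcsa01}"

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Take this host out of maintenance mode.
exit_maintenance() {
    run esxcli system maintenanceMode set -e false
}

# Read `vim-cmd vmsvc/getallvms` output on stdin and print the ID of the
# VM whose name (second column) matches $1.
vm_id_by_name() {
    awk -v name="$1" '$1 ~ /^[0-9]+$/ && $2 == name { print $1; exit }'
}

# Power on a VM by ID.
power_on_vm() {
    run vim-cmd vmsvc/power.on "$1"
}

# Look up the vCenter VM on this host and power it on if it is registered here.
power_on_vcenter() {
    id="$(vim-cmd vmsvc/getallvms | vm_id_by_name "$VCENTER_NAME")"
    if [ -z "$id" ]; then
        echo "$VCENTER_NAME is not registered on this host" >&2
        return 1
    fi
    power_on_vm "$id"
}
```

The idea is to run `exit_maintenance` on every host first, then run `power_on_vcenter` on the host where the vCenter VM is registered. Even in dry-run mode the lookup still calls `vim-cmd vmsvc/getallvms`, which only reads the inventory.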

Just a quick note: while the ESXi hosts power on, Virtual SAN checks all the data objects, so some objects or VMs may report as inaccessible in the vCenter UI while these checks run. Once Virtual SAN has completed its checks, the VMs will be available again.