As core density on CPUs increases, it opens up the opportunity to consolidate the number of nodes required in any given cluster. In a vSAN cluster, however, node consolidation has a negative effect on available IOPS. Each node provides a specific amount of IOPS, so lowering the number of hosts in the cluster removes the IOPS capability of the nodes you consolidate away. Take the following for example:

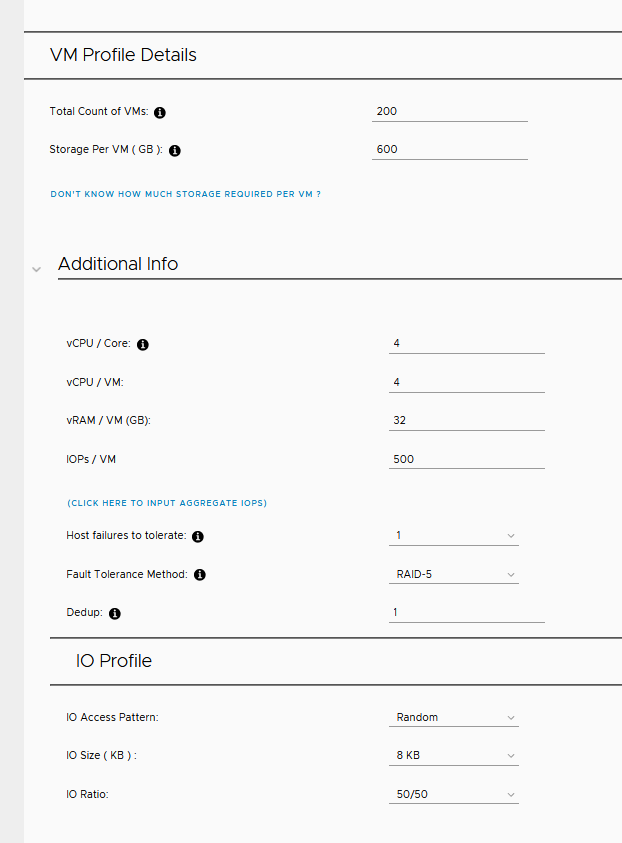

Number of VMs : 200

vCPU Per VM : 4

Virtual Memory per VM : 32GB

Storage per VM : 600GB

vCPU to Core Ratio : 4 to 1
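
To put those inputs into aggregate terms, here is a rough back-of-the-envelope sketch in Python. It only multiplies out the figures above and does not reproduce the vSAN Sizing tool's own logic, which factors in overheads and storage policy on top of this. The variable names are my own.

```python
import math

# Workload inputs taken from the list above
num_vms = 200
vcpu_per_vm = 4
mem_per_vm_gb = 32
storage_per_vm_gb = 600
vcpu_per_core = 4  # 4 to 1 vCPU to core ratio

# Aggregate demand, before any hypervisor, vSAN or storage policy overhead
total_vcpus = num_vms * vcpu_per_vm
cores_needed = math.ceil(total_vcpus / vcpu_per_core)
total_mem_gb = num_vms * mem_per_vm_gb
total_storage_gb = num_vms * storage_per_vm_gb

print(f"Total vCPUs:          {total_vcpus}")
print(f"Physical cores:       {cores_needed}")
print(f"Total VM memory (GB): {total_mem_gb}")
print(f"Total VM storage (GB): {total_storage_gb}")
```

So the raw demand is 800 vCPUs (200 physical cores at 4 to 1), 6,400GB of memory and 120,000GB of storage, which is what the sizing tool then builds its recommendation on.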

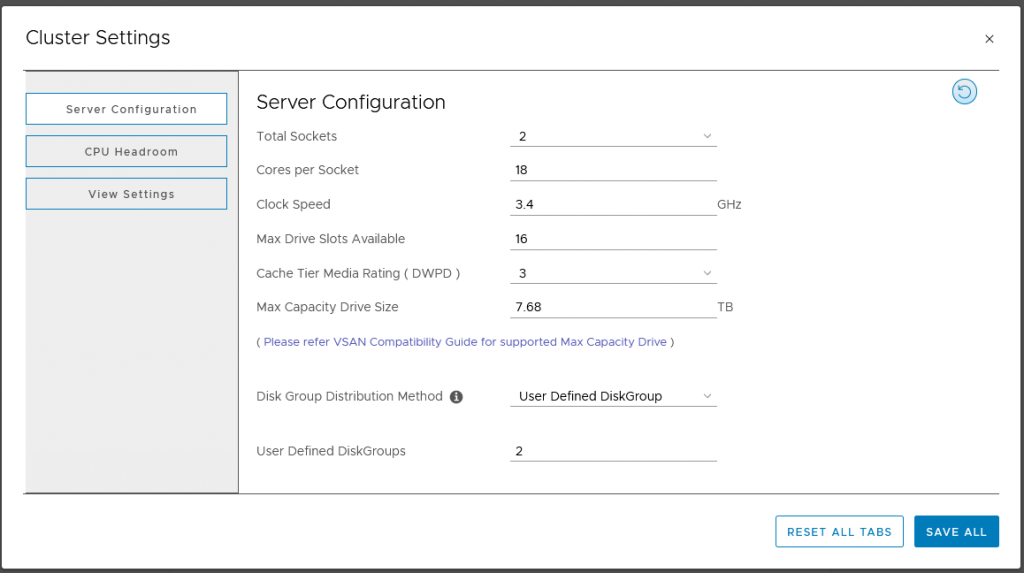

Now for the purpose of this sizing exercise I am going to use the vSAN Sizing tool and apply some cluster settings as per below:

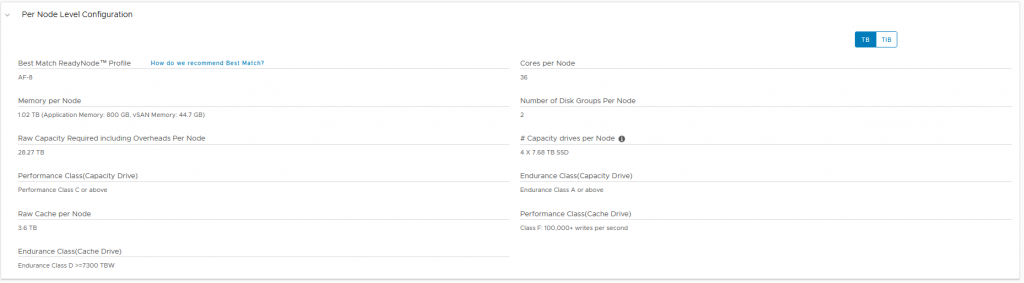

So in the above scenario, the number of cores per CPU is 18, and I want to ensure that this is a two disk group configuration. We then input the workload details as per below:

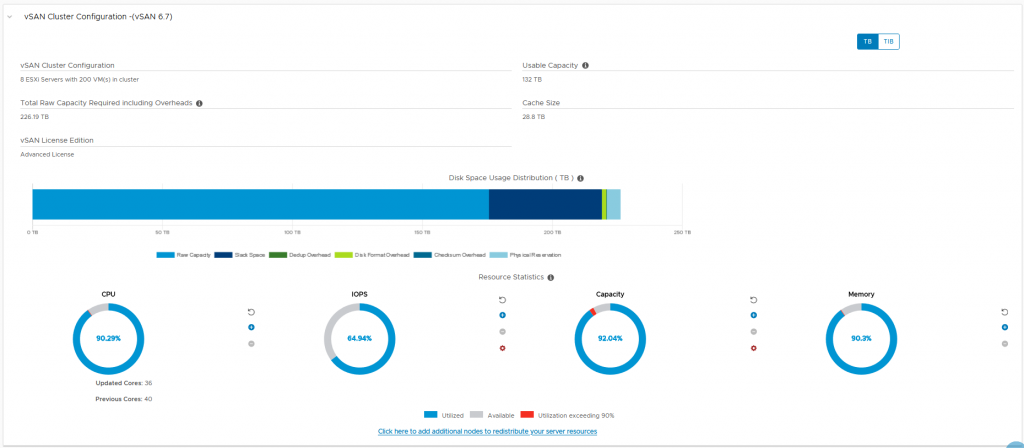

When we click on recommendation, you will see that it shows a required node count of 8 (not taking into account any N+1 capability, as we left that as 0 for the purpose of this sizing).

And we can see the disk config below:

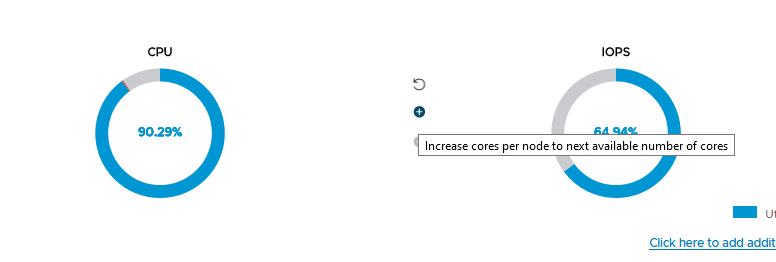

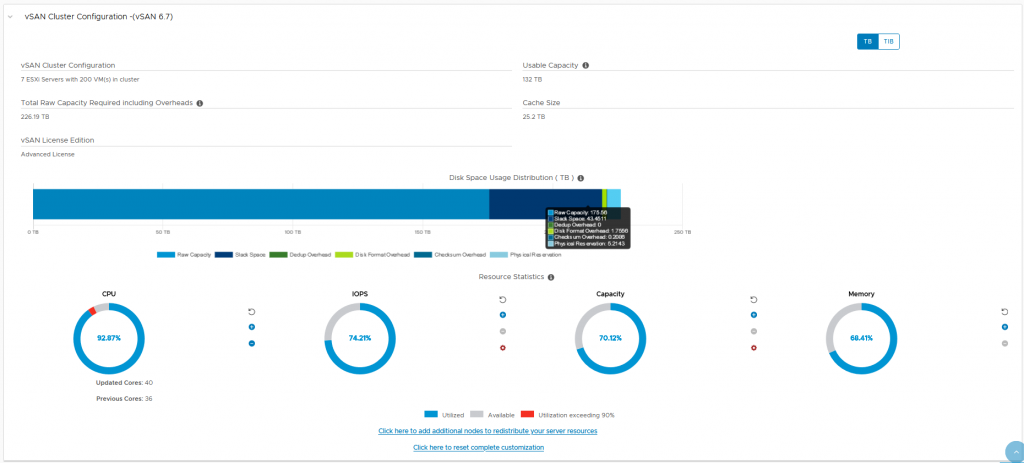

However, if we increase the number of CPU cores to 20 by clicking on the “+” in the sizing output, we can see that it reduces the number of nodes:

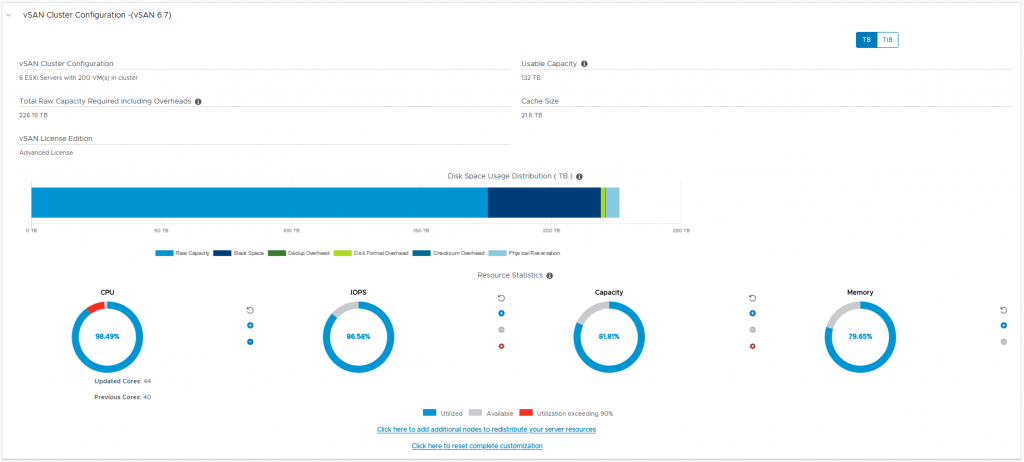

And if we increase the number of cores again to 22, we get a further reduction in the number of nodes to 6:

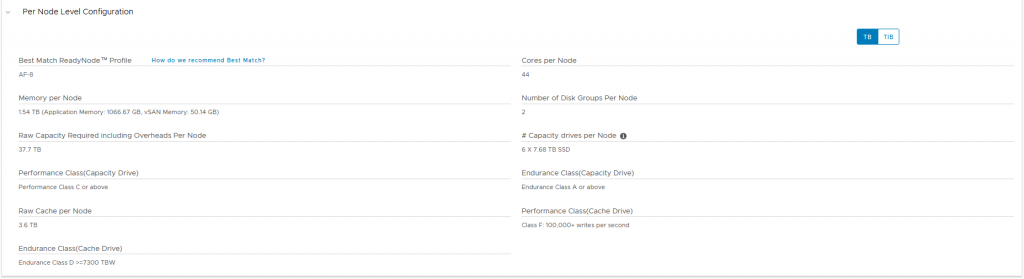

The sizing tool will dynamically increase or decrease the number of disks required per host as well as the RAM per node that is required as you can see here:

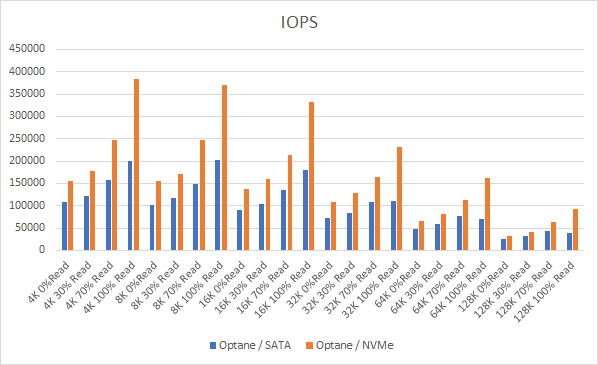

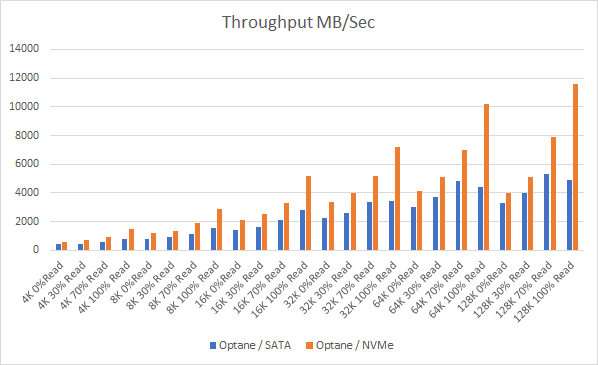

But one thing we have not factored in here is the decrease in IOPS capability that comes from reducing the cluster by two nodes. If, for example, each node was capable of 80K IOPS, reducing the node count by two means you have just lost 160K IOPS of capability. So what can we do to mitigate that?
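
The arithmetic behind that loss can be sketched as below. It assumes cluster IOPS scales linearly with node count, which is a simplification, and uses the 80K per node example figure together with the 8 and 6 node results from the sizing above. The function name is my own.

```python
def cluster_iops(nodes: int, iops_per_node: int) -> int:
    """Aggregate IOPS, assuming every node contributes equally (simplification)."""
    return nodes * iops_per_node

iops_per_node = 80_000   # example figure, not a measured value
nodes_before = 8         # node count with 18 cores per CPU
nodes_after = 6          # node count with 22 cores per CPU

before = cluster_iops(nodes_before, iops_per_node)
after = cluster_iops(nodes_after, iops_per_node)
lost = before - after

# What each remaining node would need to deliver to match the original cluster
required_per_node = before / nodes_after

print(f"IOPS before consolidation: {before:,}")
print(f"IOPS after consolidation:  {after:,}")
print(f"IOPS lost:                 {lost:,}")
print(f"Needed per node to match:  {required_per_node:,.0f}")
```

In other words, to stay level with the 8 node cluster, each of the 6 remaining nodes would need to deliver roughly a third more IOPS than before.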

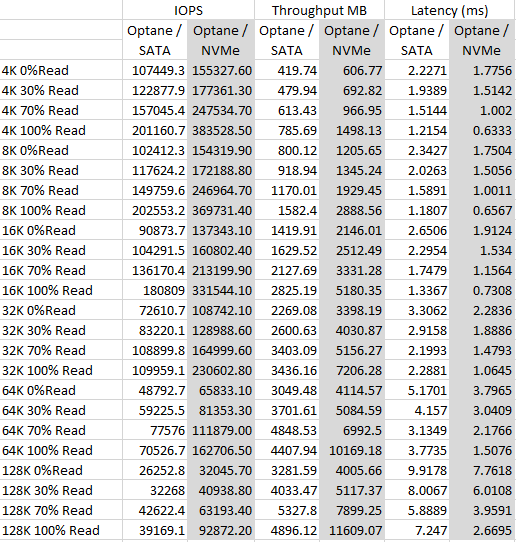

Well, instead of using SAS/SATA SSDs in your vSAN design, you could opt to use Intel Optane for cache and NAND-based NVMe drives for capacity.

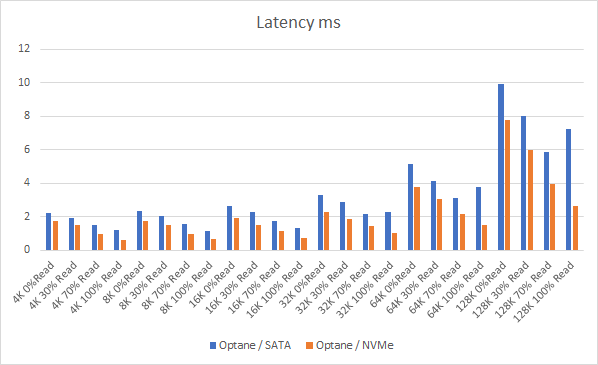

Intel Optane greatly improves write performance, as I have written about before, and read performance is also greatly accelerated because the capacity devices are NVMe. So reducing your node count by two in this case, while utilising this kind of technology, means you still get similar levels of performance. The best part is that the overall solution will cost you less too, so your TCO comes down, which is good for your finance department, right?

One question I get asked frequently is what size Optane device is sufficient?

Well, in all of my testing, I very rarely saturated the write buffer, even with 375GB Optane drives as cache devices. The reason is that vSAN starts de-staging from the cache tier to the capacity tier when the write buffer becomes around 30% full, and because the capacity tier is NVMe based, the de-staging happens a lot quicker, especially since vSAN 6.7 U3, where the de-stage limits have been removed.
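
To give a feel for what "around 30% full" means in practice, here is a minimal sketch. It treats the whole device as write buffer, which is a simplification, and the 30% figure is the approximate threshold mentioned above rather than an exact vSAN constant. The names are my own.

```python
DESTAGE_START_FRACTION = 0.30  # "around 30% full", approximate

def destage_start_gb(cache_size_gb: float,
                     fraction: float = DESTAGE_START_FRACTION) -> float:
    """Approximate amount of buffered writes at which de-staging begins."""
    return cache_size_gb * fraction

for size_gb in (375, 750):
    print(f"{size_gb}GB cache device: de-staging starts at roughly "
          f"{destage_start_gb(size_gb):.1f}GB of buffered writes")
```

With a fast capacity tier draining the buffer, writes would have to arrive faster than they can be de-staged for a sustained period before the rest of the device fills up.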

So when would a 750GB Optane be useful?

For highly write-intensive workloads such as video surveillance and databases, or when your capacity disks are much slower. Optane can still be used in vSAN configurations where the capacity tier is SAS/SATA, which of course is not as fast as most NVMe devices, so the write buffer can get more full.

So just to recap, you can save money on your vSAN deployments by consolidating hosts with higher core count CPUs and by leveraging newer technology such as Intel Optane in the cache tier and NVMe in the capacity tier, all whilst maintaining the same level of performance or better. What’s not to like?