As we all know there are a number of ways of scaling capacity in a vSAN environment, you can add disks to existing hosts and scale the storage independently of compute, or you can add nodes to the cluster and scale both the storage and compute together, but what if you are in a situation where you do not have any free disk slots available, and / or you are unable to add more nodes to the existing cluster? Well vSAN 7.0U1 comes with a new feature called vSAN HCI Mesh, so what does this mean and how does it work?

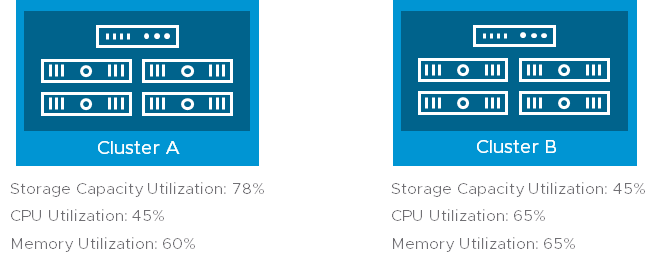

Let’s take the scenario below, we have two vSAN clusters in the same vCenter, Cluster A is nearing capacity from a storage perspective, but the compute is relatively under utilised, there are no available disk slots to expand out the storage. Cluster B on the other hand has a lot of free storage capacity but is more utilised on the compute side of things:

Now the vSAN HCI Mesh will allow you to consume storage on a remote vSAN cluster providing it exists within the same vCenter inventory, there are no special hardware / software requirements (apart from 7.0U1) and the traffic will leverage the existing vSAN network traffic configuration.

This cool feature adds an elastic capability to vSAN Clusters, especially if you need to have some additional temporary capacity for application refactoring or service upgrade where you want to deploy the new services but keep the old one operational until the transition is made.

VMware has not left the monitoring capabilities of such use out either, in the UI you can monitor the usage of “Remote VM” from a capacity perspective as well as within the performance service

So this clearly allows dissagregation of storage and compute in a vSAN environment and offers that flexibility and elasticity of storage consumption are there any limitations?

- A vSAN cluster can only mount up to 5 remote vSAN Datastores

- The vSAN Cluster must be able to access the other vSAN cluster(s) via the vSAN Network

- vSphere and vCenter must be running 7.0U1 or later

- Enterprise and Enterprise Plus editions of vSAN

- Enough hosts / configuration to support storage policy, for example if your remote cluster has only four hosts, you cannot use a policy which requires RAID6

So this is a pretty cool feature and sort of elliminates the need for Storage Only vSAN nodes which was discussed in the past at many VMworlds