This has been a personal project, prompted by a few people asking me for help working out how to create and manipulate vSAN storage policies with the new PowerCLI cmdlets introduced in PowerCLI 6.5. The tasks I will cover here are:

- Creating a Brand New Storage Policy

- Changing an existing storage policy

For the purpose of this exercise I am using PowerCLI 6.5 Release 1, build 4624819. I would recommend you use that build or newer, as some of the commands do not work as intended on earlier PowerCLI builds.

Creating a Storage Policy

Before we create a storage policy, make sure you are logged in to your vCenter Server via PowerCLI (for example with Connect-VIServer). Then get a list of capabilities from the storage provider: for this we run Get-SpbmCapability and filter for the vSAN policy capabilities:

    C:\> Get-SpbmCapability VSAN*

    Name                        ValueCollectionType ValueType      AllowedValue
    ----                        ------------------- ---------      ------------
    VSAN.cacheReservation                           System.Int32
    VSAN.checksumDisabled                           System.Boolean
    VSAN.forceProvisioning                          System.Boolean
    VSAN.hostFailuresToTolerate                     System.Int32
    VSAN.iopsLimit                                  System.Int32
    VSAN.proportionalCapacity                       System.Int32
    VSAN.replicaPreference                          System.String
    VSAN.stripeWidth                                System.Int32

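The value types map to the following kinds of value:

- System.Int32 = numerical value

- System.Boolean = True or False ($true or $false in PowerShell)

- System.String = text; for VSAN.replicaPreference this is either "RAID-1 (Mirroring) - Performance" or "RAID-5/6 (Erasure Coding) - Capacity", typed with straight quotes and plain hyphens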

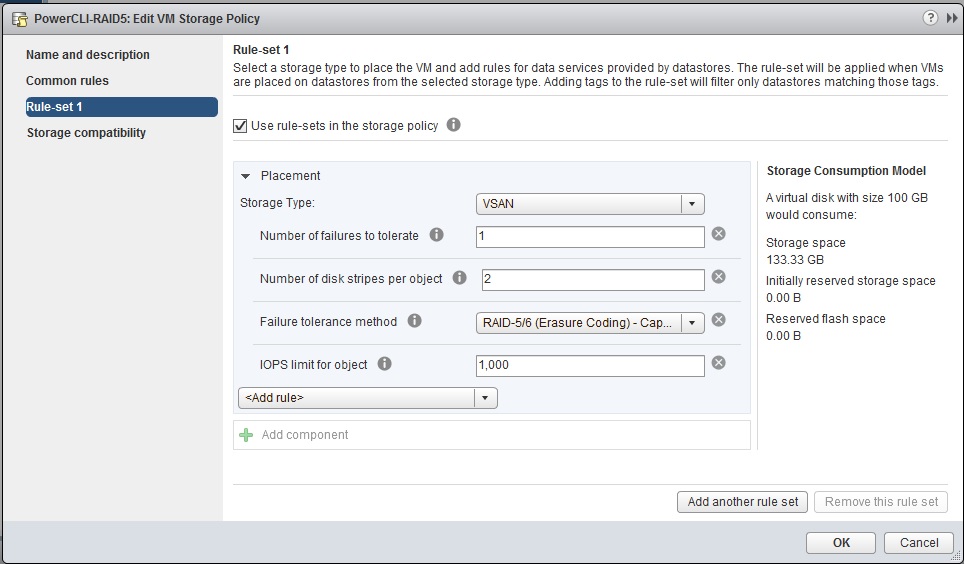
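Because New-SpbmRule needs a value of the capability's ValueType, it can help to check a value before building a rule. The function below is a minimal sketch: Test-VsanRuleValue is my own hypothetical helper, not a PowerCLI cmdlet. It assumes the capability object exposes Name and ValueType as in the Get-SpbmCapability output above, and it hard-codes the two replicaPreference strings listed above.

```powershell
# Hypothetical helper (not a PowerCLI cmdlet): checks a proposed value against
# the ValueType that Get-SpbmCapability reports for a vSAN capability.
function Test-VsanRuleValue {
    param(
        # A capability object, e.g. from Get-SpbmCapability -Name "VSAN.stripeWidth"
        [Parameter(Mandatory)] $Capability,
        [Parameter(Mandatory)] $Value
    )

    # Assumption: the accepted VSAN.replicaPreference strings use plain ASCII
    # hyphens, as they appear in the Get-SpbmStoragePolicy output.
    $replicaChoices = @(
        'RAID-1 (Mirroring) - Performance',
        'RAID-5/6 (Erasure Coding) - Capacity'
    )

    # ValueType may be a System.Type or a plain string; normalise it to a name.
    $typeName = if ($Capability.ValueType -is [type]) {
        $Capability.ValueType.FullName
    } else {
        [string]$Capability.ValueType
    }

    switch ($typeName) {
        'System.Int32'   { return $Value -is [int] }
        'System.Boolean' { return $Value -is [bool] }
        'System.String'  {
            if ($Capability.Name -eq 'VSAN.replicaPreference') {
                # -ccontains: exact, case-sensitive match against the allowed strings
                return $replicaChoices -ccontains $Value
            }
            return $Value -is [string]
        }
        default          { return $false }
    }
}
```

Against a connected vCenter, `Test-VsanRuleValue -Capability (Get-SpbmCapability -Name "VSAN.stripeWidth") -Value 2` should return True, while a replicaPreference string pasted with an en dash would return False.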

So I want to create a policy with the following definitions:

- Policy Name: PowerCLI-RAID5

- Policy Description: RAID5 Policy Created with PowerCLI

- Failures to Tolerate: 1

- Failure Tolerance Method: RAID5 (Erasure Coding)

- Stripe Width: 2

- IOPS Limit: 1000

To create a policy with the above specification we need to run the following command:

    New-SpbmStoragePolicy -Name PowerCLI-RAID5 -Description "RAID5 Policy Created with PowerCLI" `
        -AnyOfRuleSets `
        (New-SpbmRuleSet `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.hostFailuresToTolerate") -Value 1), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.stripeWidth") -Value 2), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.replicaPreference") -Value "RAID-5/6 (Erasure Coding) - Capacity"), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.iopsLimit") -Value 1000) `
        )
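If you build policies often, the same command can be driven from a table of capability names and values. This is a sketch under my own assumptions: New-VsanPolicyFromTable is a hypothetical wrapper, and it passes the rules to New-SpbmRuleSet through an explicit -AllOfRules parameter, whereas the command above passes them positionally. An ordered dictionary keeps the rules in the order you list them.

```powershell
# Hypothetical wrapper (not part of PowerCLI): builds one rule per entry in
# $Rules, puts them all in a single rule set, and creates the policy.
function New-VsanPolicyFromTable {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [string] $Description,
        # Capability name -> value; pass an [ordered] table to keep rule order.
        [Parameter(Mandatory)] [System.Collections.IDictionary] $Rules
    )

    # One New-SpbmRule per capability, as in the inline command.
    $ruleObjects = foreach ($capabilityName in $Rules.Keys) {
        New-SpbmRule -Capability (Get-SpbmCapability -Name $capabilityName) -Value $Rules[$capabilityName]
    }

    # Rules inside one rule set are ANDed together, matching the
    # AnyOfRuleSets output of Get-SpbmStoragePolicy.
    $ruleSet = New-SpbmRuleSet -AllOfRules $ruleObjects

    $params = @{ Name = $Name; AnyOfRuleSets = $ruleSet }
    if ($Description) { $params.Description = $Description }
    New-SpbmStoragePolicy @params
}

# Example (needs an active Connect-VIServer session):
# New-VsanPolicyFromTable -Name PowerCLI-RAID5 -Description "RAID5 Policy Created with PowerCLI" -Rules ([ordered]@{
#     'VSAN.hostFailuresToTolerate' = 1
#     'VSAN.stripeWidth'            = 2
#     'VSAN.replicaPreference'      = 'RAID-5/6 (Erasure Coding) - Capacity'
#     'VSAN.iopsLimit'              = 1000
# })
```

Keeping the values in a table makes it easy to see the whole policy definition at a glance, at the cost of one extra level of indirection.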

We can then check for our policy by running the following command:

    C:\> Get-SpbmStoragePolicy -Name "PowerCLI-RAID5" | Select-Object -Property *

    CreatedBy       : Temporary user handle
    CreationTime    : 11/04/2017 13:42:36
    Description     : RAID5 Policy Created with PowerCLI
    LastUpdatedBy   : Temporary user handle
    LastUpdatedTime : 11/04/2017 13:42:36
    Version         : 0
    PolicyCategory  : REQUIREMENT
    AnyOfRuleSets   : {(VSAN.hostFailuresToTolerate=1) AND (VSAN.stripeWidth=2) AND (VSAN.replicaPreference=RAID-5/6 (Erasure Coding) - Capacity) AND (VSAN.iopsLimit=1000)}
    CommonRule      : {}
    Name            : PowerCLI-RAID5
    Id              : 9f8f61f4-24db-4513-b48f-a48c584f0eac
    Client          : VMware.VimAutomation.Storage.Impl.V1.StorageClientImpl
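The AnyOfRuleSets property prints every rule on one line. To check each rule separately, a small sketch like the one below walks the rule sets. Get-VsanPolicyRule is a hypothetical name, and the code assumes that each rule set exposes an AllOfRules collection whose rules carry Capability.Name and Value, which is how I understand the PowerCLI 6.5 R1 objects to be shaped.

```powershell
# Hypothetical helper (not a PowerCLI cmdlet): emits one object per rule so
# each capability and its value can be checked individually.
function Get-VsanPolicyRule {
    param([Parameter(Mandatory)] [string] $PolicyName)

    $policy = Get-SpbmStoragePolicy -Name $PolicyName
    # Assumption: each rule set exposes AllOfRules, and each rule exposes
    # Capability.Name and Value.
    foreach ($ruleSet in $policy.AnyOfRuleSets) {
        foreach ($rule in $ruleSet.AllOfRules) {
            [pscustomobject]@{
                Capability = $rule.Capability.Name
                Value      = $rule.Value
            }
        }
    }
}

# Example: Get-VsanPolicyRule -PolicyName PowerCLI-RAID5 | Format-Table -AutoSize
```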

And from the UI, we can see our storage policy.

That's our policy created; now we'll move on to changing an existing policy.

Changing an existing storage policy

Suppose we now decide that the policy we created earlier should use RAID 6 rather than RAID 5. To do that we have to change Failures to Tolerate to 2, and we will also want to change the policy name and description, as it will no longer be RAID5. When changing a policy, we must also specify the values for all the other policy definitions: any definition we do not specify will be removed from the storage policy. So first we run the command that shows us the current policy definition:

    C:\> Get-SpbmStoragePolicy -Name "PowerCLI-RAID5" | Select-Object -Property *

    CreatedBy       : Temporary user handle
    CreationTime    : 11/04/2017 13:42:36
    Description     : RAID5 Policy Created with PowerCLI
    LastUpdatedBy   : Temporary user handle
    LastUpdatedTime : 11/04/2017 13:42:36
    Version         : 0
    PolicyCategory  : REQUIREMENT
    AnyOfRuleSets   : {(VSAN.hostFailuresToTolerate=1) AND (VSAN.stripeWidth=2) AND (VSAN.replicaPreference=RAID-5/6 (Erasure Coding) - Capacity) AND (VSAN.iopsLimit=1000)}
    CommonRule      : {}
    Name            : PowerCLI-RAID5
    Id              : 9f8f61f4-24db-4513-b48f-a48c584f0eac
    Client          : VMware.VimAutomation.Storage.Impl.V1.StorageClientImpl

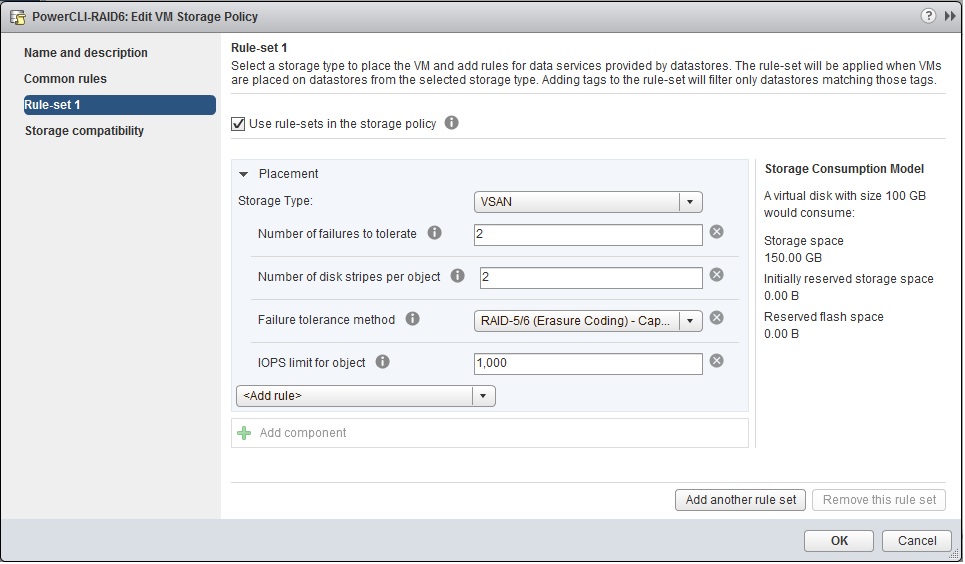

So we need to make the following changes to the policy:

- Change the Failures to Tolerate value from 1 to 2

- Change the name of the policy from PowerCLI-RAID5 to PowerCLI-RAID6

- Change the description of the policy from “RAID5 Policy Created with PowerCLI” to “RAID6 Policy Changed with PowerCLI”

We run the following command, which specifies the new values as well as the values we do not want to change:

    Set-SpbmStoragePolicy -StoragePolicy PowerCLI-RAID5 -Name PowerCLI-RAID6 -Description "RAID6 Policy Changed with PowerCLI" `
        -AnyOfRuleSets `
        (New-SpbmRuleSet `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.hostFailuresToTolerate") -Value 2), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.stripeWidth") -Value 2), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.replicaPreference") -Value "RAID-5/6 (Erasure Coding) - Capacity"), `
            (New-SpbmRule -Capability (Get-SpbmCapability -Name "VSAN.iopsLimit") -Value 1000) `
        )
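Retyping every unchanged value is easy to get wrong, since any rule left out is removed from the policy. One alternative, sketched here, is to copy the existing rules, override only the ones being changed, and pass the full set to Set-SpbmStoragePolicy. Update-VsanPolicyRule is a hypothetical helper; it assumes the same object shape as before (AnyOfRuleSets, AllOfRules, Capability.Name, Value), that New-SpbmRuleSet accepts an explicit -AllOfRules parameter, and it only handles a policy with a single rule set, as in this post.

```powershell
# Hypothetical helper (not a PowerCLI cmdlet): read-modify-write of a vSAN
# policy, so rules that are not being changed are carried over, not removed.
function Update-VsanPolicyRule {
    param(
        [Parameter(Mandatory)] [string] $PolicyName,
        # Capability name -> new value, e.g. @{ 'VSAN.hostFailuresToTolerate' = 2 }
        [Parameter(Mandatory)] [System.Collections.IDictionary] $Changes,
        [string] $NewName,
        [string] $Description
    )

    $policy = Get-SpbmStoragePolicy -Name $PolicyName
    if (-not $policy) { throw "Storage policy '$PolicyName' not found." }
    if (@($policy.AnyOfRuleSets).Count -ne 1) {
        throw 'This sketch only handles policies with a single rule set.'
    }

    # Start from the current rules, keeping their existing order.
    $merged = [ordered]@{}
    foreach ($rule in $policy.AnyOfRuleSets[0].AllOfRules) {
        $merged[$rule.Capability.Name] = $rule.Value
    }
    # Overwrite (or add) only the capabilities being changed.
    foreach ($key in $Changes.Keys) {
        $merged[$key] = $Changes[$key]
    }

    # Rebuild the complete rule list, since omitted rules would be dropped.
    $ruleObjects = foreach ($key in $merged.Keys) {
        New-SpbmRule -Capability (Get-SpbmCapability -Name $key) -Value $merged[$key]
    }

    $setParams = @{
        StoragePolicy = $policy
        AnyOfRuleSets = (New-SpbmRuleSet -AllOfRules $ruleObjects)
    }
    # Only pass a new name or description when one was supplied.
    if ($NewName)     { $setParams.Name = $NewName }
    if ($Description) { $setParams.Description = $Description }
    Set-SpbmStoragePolicy @setParams
}
```

With this helper, `Update-VsanPolicyRule -PolicyName PowerCLI-RAID5 -Changes @{ 'VSAN.hostFailuresToTolerate' = 2 } -NewName PowerCLI-RAID6 -Description "RAID6 Policy Changed with PowerCLI"` is intended to make the same change as the command above without restating the stripe width, replica preference, or IOPS limit.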

We can verify the policy has been changed by running the command with the new policy name:

    C:\> Get-SpbmStoragePolicy -Name "PowerCLI-RAID6" | Select-Object -Property *

    CreatedBy       : Temporary user handle
    CreationTime    : 11/04/2017 13:42:36
    Description     : RAID6 Policy Changed with PowerCLI
    LastUpdatedBy   : Temporary user handle
    LastUpdatedTime : 11/04/2017 14:03:51
    Version         : 2
    PolicyCategory  : REQUIREMENT
    AnyOfRuleSets   : {(VSAN.hostFailuresToTolerate=2) AND (VSAN.stripeWidth=2) AND (VSAN.replicaPreference=RAID-5/6 (Erasure Coding) - Capacity) AND (VSAN.iopsLimit=1000)}
    CommonRule      : {}
    Name            : PowerCLI-RAID6
    Id              : 9f8f61f4-24db-4513-b48f-a48c584f0eac
    Client          : VMware.VimAutomation.Storage.Impl.V1.StorageClientImpl

And again, in the UI we can see the changes that were made.

That's how you change an existing storage policy. I hope this post helps you manage your storage policies via PowerCLI. Thanks go out to Pushpesh at VMware for showing me the error of my ways when I was trying to figure this out myself.